How-To use VisIt on JURECA

Hardware Setup

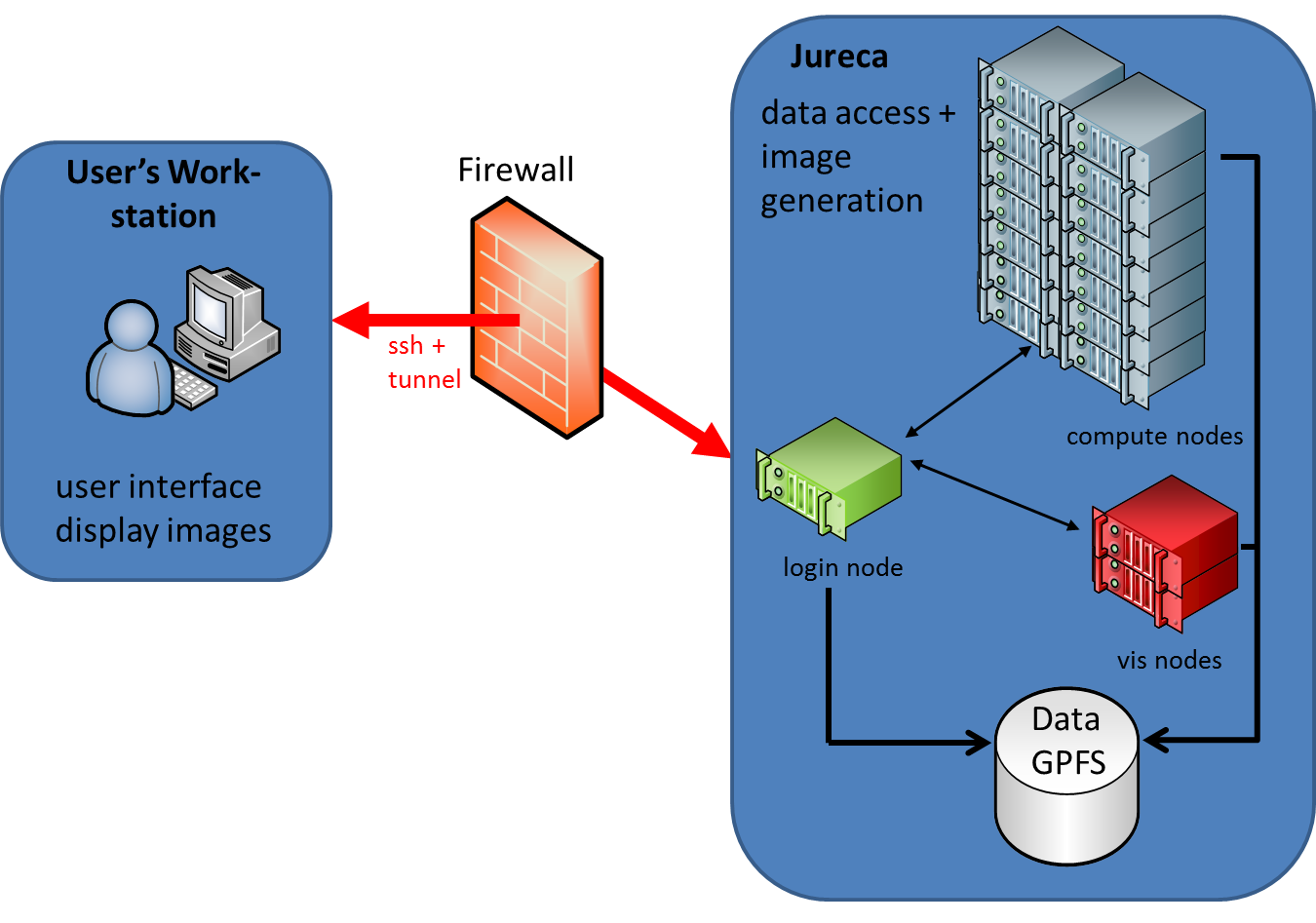

The image above illustrates the general JURECA hardware setup used in a distributed visualisation session.

The user's own workstation acts as a frontend for setting up the visualisation pipeline and adjusting the parameters of the visualisation process. The rendered image is also displayed on the user's workstation.

The right side illustrates the major components of the JURECA cluster: login, compute and visualisation nodes and the GPFS storage system (JUST), which holds the data to be visualised.

The user has access to the resources of JURECA only via an ssh connection to a login node. In between is a firewall which only allows access to the system by ssh (port 22). All other network ports are blocked. Therefore all communication related to the distributed visualisation environment has to be tunneled over ssh.
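As a rough illustration of what "tunneled over ssh" means here, the following sketch builds a standard `ssh -L` port-forwarding command. The user name, host names and port numbers are placeholders chosen for this example, not values from this page, and VisIt normally sets up its own tunnels, so this is only meant to show the mechanism.

```python
import shlex


def build_tunnel_command(user, login_host, local_port, target_host, target_port):
    """Return an ssh argument list that forwards a local port through a login node.

    -N : do not run a remote command, only forward ports
    -L : forward local_port on the workstation to target_host:target_port,
         as seen from the login node
    """
    forward = f"{local_port}:{target_host}:{target_port}"
    return ["ssh", "-N", "-L", forward, f"{user}@{login_host}"]


if __name__ == "__main__":
    # All values below are hypothetical placeholders.
    cmd = build_tunnel_command("user1", "login.example.org", 5901, "visnode01", 5901)
    print(shlex.join(cmd))
```

Because only port 22 passes the firewall, any other service on the cluster is reached through such a forwarded port on the workstation instead of through a direct connection.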

In general, a distributed visualisation package, like VisIt, consists of a viewer/GUI component and a parallel server/engine component.

These software components can be mapped onto the hardware components in different ways. The three most commonly used scenarios are discussed in the following sections.

Remote Visualisation on a JURECA Vis Node

In this scenario only the VisIt viewer (GUI) is running on the user's workstation. The data processing and image generation are performed by one or more VisIt engines (servers) running on one or more JURECA vis nodes.

Like the compute nodes, the vis nodes have to be allocated through the JURECA batch system. This means that this visualisation scenario may not start instantly if all vis nodes are already taken by other users!

In this case one has to wait for free vis nodes. At the moment it is not yet possible to reserve vis nodes, which would guarantee access to them at a certain point in time. This feature is under development and will be available in the future.

All network traffic (e.g. sending images from the VisIt server to the viewer) runs across the login node and has to be tunneled.

VisIt has built-in functionality to connect to the login node and to start the necessary batch job, so the user does not need to log in to JURECA manually via an ssh shell and start batch jobs for VisIt there. Nevertheless, VisIt uses ssh under the hood.

Therefore an important prerequisite for this scenario is flawlessly working ssh connectivity between the user's workstation and JURECA! If a Windows operating system is used, it may be necessary to use an ssh key agent like Pageant, which is part of the PuTTY ssh client for Windows.

To get this scenario running, the following steps have to be performed:

1. Check ssh connectivity

Make sure that your workstation has ssh connectivity to the login nodes of JURECA. On a Linux workstation just check that you can log in to JURECA with the regular ssh command.
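The check on a Linux workstation could be scripted roughly as follows. This is a minimal sketch, assuming an OpenSSH client is installed; the function names are made up for this example. `BatchMode=yes` disables interactive password and passphrase prompts, so the check only succeeds if key-based login already works without user interaction, which is what a non-interactive launch needs.

```python
import subprocess


def build_check_command(user, host, timeout_s=10):
    """Build an ssh call that logs in, runs `true` on the remote side and exits."""
    return [
        "ssh",
        "-o", "BatchMode=yes",                 # never prompt for a password or passphrase
        "-o", f"ConnectTimeout={timeout_s}",   # give up if the host does not answer
        f"{user}@{host}",
        "true",
    ]


def can_log_in(user, host, timeout_s=10):
    """Return True if a non-interactive ssh login to host succeeds."""
    try:
        result = subprocess.run(
            build_check_command(user, host, timeout_s),
            capture_output=True,
            timeout=timeout_s + 5,
        )
    except (OSError, subprocess.TimeoutExpired):
        # ssh is not installed, or the connection attempt hung
        return False
    return result.returncode == 0
```

Calling `can_log_in("user1", "<login node>")` with your own account and the address of a JURECA login node should return `True` before you continue with the next steps; both arguments here are placeholders.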

On a Windows system one needs to install an ssh client first, e.g. PuTTY together with the ssh agent Pageant. You may also want to install PuTTYgen to generate ssh keys.

Start Pageant and load your private ssh key into Pageant. Pageant will ask you for your passphrase, which unlocks the key. Now VisIt will be able to connect to JURECA using the (unlocked) private key stored in Pageant.

2. Install VisIt on Your Local Workstation

Download VisIt and install it on your workstation. Your local VisIt version must match the version installed on JURECA, which is VisIt 2.10. So you have to install VisIt 2.10 to get the setup running.
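A small helper can make the version requirement explicit. The sketch below assumes that "matching" means the same major and minor number, so that a patch release such as 2.10.3 would count as compatible with 2.10; that interpretation is an assumption of this example and is not stated on this page.

```python
def major_minor(version):
    """Split a version string such as '2.10.3' into the integer pair (2, 10)."""
    parts = version.strip().split(".")
    return int(parts[0]), int(parts[1])


def versions_compatible(local_version, remote_version="2.10"):
    """Return True if local and remote VisIt share the same major.minor number."""
    return major_minor(local_version) == major_minor(remote_version)
```

Comparing integer pairs rather than strings or floats avoids treating 2.10 and 2.1 as the same release.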

3. Create Proper Host Configuration

To work in a distributed setup, VisIt needs information about the remote cluster and about how to launch processes on the cluster. These settings are called "host profiles" and can be adjusted in VisIt under Options --> Host profiles.

To keep things simple, we have created a predefined host profile for JURECA, which can be downloaded from the attachments of this page (host_jureca.xml).
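Once downloaded, the profile has to be placed where VisIt looks for host profiles. The following sketch checks that the file is well-formed XML and copies it into a target directory. The function name is hypothetical, and the location of the directory is an assumption: VisIt commonly reads per-user profiles from a `hosts` folder inside the user's VisIt configuration directory (for example `~/.visit/hosts` on Linux), but you should verify the path for your installation.

```python
import shutil
import xml.etree.ElementTree as ET
from pathlib import Path


def install_host_profile(profile_path, hosts_dir):
    """Validate a host profile XML file and copy it into hosts_dir.

    Raises xml.etree.ElementTree.ParseError if the file is not well-formed XML
    (for example after an incomplete download).
    """
    profile_path = Path(profile_path)
    hosts_dir = Path(hosts_dir)
    ET.parse(profile_path)                     # well-formedness check only
    hosts_dir.mkdir(parents=True, exist_ok=True)
    target = hosts_dir / profile_path.name
    shutil.copy(profile_path, target)
    return target
```

For example, `install_host_profile("host_jureca.xml", Path.home() / ".visit" / "hosts")` would install the downloaded profile under the assumed Linux location. After restarting VisIt, the profile should then appear under Options --> Host profiles.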

Attachments (14)

- Cluster_VisIt_3D.png (211.6 KB ) - added by 8 years ago.

- Cluster_VisIt_2D.png (193.8 KB ) - added by 8 years ago.

- Hardware_Setup.png (203.0 KB ) - added by 8 years ago. JURECA hardware setup

- putty2.jpg (72.3 KB ) - added by 8 years ago.

- putty3.jpg (72.8 KB ) - added by 8 years ago.

- remote_desktop.jpg (279.9 KB ) - added by 8 years ago.

- Trac_Setup_VNC.png (211.9 KB ) - added by 8 years ago.

- vis_login_compute_parallel.png (141.4 KB ) - added by 8 years ago.

- vis_login_node.png (213.9 KB ) - added by 8 years ago.

- vis_login_vis_parallel.png (211.5 KB ) - added by 8 years ago.

- vis_batch_node.png (124.8 KB ) - added by 8 years ago.

- host_jureca.2.xml (12.8 KB ) - added by 8 years ago.

- host_jureca.xml (12.8 KB ) - added by 8 years ago. JURECA hostprofile

- host_jureca_vis_batch_node.xml (3.6 KB ) - added by 8 years ago.